IOPS. IOPS. IOPS.

It’s the bragging right of the flash SSD world, and vendors go to obsessive lengths to talk about it. Check out the wiki page for IOPS. At the bottom of the page, and in its edit history, you can see the SSD makers falling over each other to make sure the world knows how many IO operations per second their products can do.

And they report it differently. Let’s review.

Chip Makers

Micron, Hitachi, SanDisk and a few other companies actually make the NAND chips. It is easy for them and not very debatable: a chip is clearly rated for so many reads/sec and so many writes/sec. The fun starts when people add their software magic to make SSDs.

SSD appliance makers sometimes quote the IOPS rating of the controller, while other times they simply add up the IOPS ratings of the individual SSDs. Either way, there are some impressive claims.

However, the moment you use SSDs in a shared appliance, what matters most is concurrent bandwidth, not just the raw IOPS. It does not really matter whether you call the offering an enterprise flash array, a SAN/NAS flash storage appliance or a flash memory array: a shared environment requires a LOT more concurrent bandwidth than a dedicated server-attached pipe.

A Simple Metric

So, we have SSD appliances in the market with ratings of hundreds of thousands of IOPS. But what about concurrency?

Storage controller designers traditionally did not have to worry about too many concurrent hosts. After all, if all you have is storage media capable of a few hundred or a few thousand IOPS, then what’s the point of sharing it with multiple servers?

On the other hand, the raison d’être of SSD appliances is a huge number of IOPS, and since they are attached to a network, they beg to be shared.

A single multi-core server can push bursts of 50,000 IOPS. A blade-center, a pretty pedestrian collection of four servers, or a mid-range server (such as a Dell R710) can easily put out burst loads of 200,000 IOPS. And on a SAN or NAS, those IOs are not exactly 512-byte mouselings. Consider these:

- Normal file-system buffer size of 4K Bytes: at 200,000 IOPS that is 0.8 GB/sec per server. For four mid-range servers that is 3.2 GB/sec.

- What if it is not just file-system work but you have MySQL running on the web layer, with its default of 8K Bytes? Now we’re at 6.4 GB/sec with four servers at 200K IOPS each.

- Vendors eagerly push their SSD appliances for databases. Oracle with table scans (data warehouse, DSS) will have a default block size of 32K Bytes or higher. That is 6.4 GB/sec per server, so with four servers we’re talking 25 GB/sec bursts.

You think a server cannot push the 6 GB/sec of IO in that last example? An Intel Sandy Bridge server IO slot is PCIe Gen3 x8. Four 16G FC ports can push that IO easily and the memory bus has enough bandwidth to absorb it.
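The burst figures in the list above are just IOPS multiplied by block size. Here is a minimal Python sketch of that arithmetic. The function name and the workload labels are made up for illustration, and it assumes decimal units (1 KB = 1000 bytes, 1 GB = 10^9 bytes), which is what the rounded numbers in the list imply.

```python
def burst_gb_per_sec(iops, block_kb, servers=1):
    """Burst bandwidth in GB/sec for a given IOPS rate and block size.

    Assumption: decimal units (1 KB = 1000 bytes, 1 GB = 10**9 bytes),
    chosen to match the rounded figures quoted in the text.
    """
    bytes_per_sec = iops * block_kb * 1000 * servers
    return bytes_per_sec / 1e9


# Block sizes as quoted in the list above (labels are illustrative only).
workloads = [("file system", 4), ("MySQL", 8), ("Oracle table scan", 32)]

for name, block_kb in workloads:
    per_server = burst_gb_per_sec(200_000, block_kb)
    four_servers = burst_gb_per_sec(200_000, block_kb, servers=4)
    print(f"{name}: {per_server:.1f} GB/sec per server, "
          f"{four_servers:.1f} GB/sec for four servers")
```

With binary kilobytes (4096-byte blocks) the results come out a few percent higher, which does not change the argument.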

A shared SAN SSD appliance sees a very different kind of load mix than a PCI-e SSD card or a PCI-e direct-attached dedicated appliance. There is a reason there are NO TPC-H database benchmarks with SAN SSD appliances (as of March 2012). Go ahead. Check it out. There are several benchmarks floating around for direct-attached PCI-e SSD or PCI-e SSD appliances doing well on transaction processing applications. That’s single use – small IO. They hardly get more civilized than that.

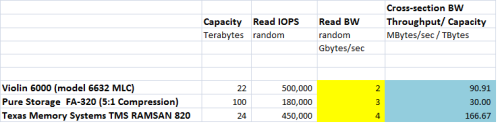

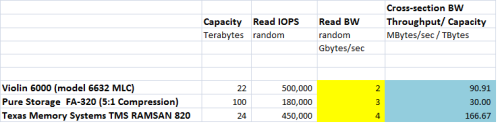

Here are the performance specs of the three SAN SSD appliances.

- Specs downloaded from the respective vendors’ websites as of March 28, 2012.

Please let us know if you find any errors or discrepancies and we’ll make every effort to correct them promptly.

The last column is the cross-section bandwidth of the storage. It is a simple metric obtained by dividing the bandwidth of the storage’s connectivity by the total capacity of the storage. The connectivity bandwidth is either the bandwidth of the network connections or the bandwidth of the flash attachment network, whichever one is the dominant part.
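As a rough sketch of how that ratio is computed, here it is in Python. The function name and the example numbers are hypothetical and are not taken from any vendor’s spec sheet. Which bandwidth figure counts as the connectivity bandwidth is left to the caller.

```python
def cross_section_bandwidth(connectivity_gb_per_sec, capacity_tb):
    """Connectivity bandwidth (GB/sec) per TB of total capacity."""
    if capacity_tb <= 0:
        raise ValueError("capacity_tb must be positive")
    return connectivity_gb_per_sec / capacity_tb


# Hypothetical appliance, NOT a real product spec:
# 4 GB/sec of connectivity bandwidth in front of 10 TB of flash.
example_bandwidth_gb_per_sec = 4.0
example_capacity_tb = 10.0

ratio = cross_section_bandwidth(example_bandwidth_gb_per_sec,
                                example_capacity_tb)
print(f"{ratio:.2f} GB/sec per TB")
```

The same division with an IOPS rating in the numerator gives an IOPS/TB figure.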

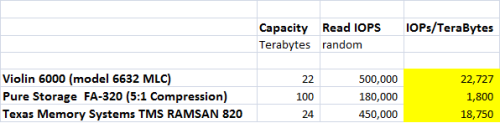

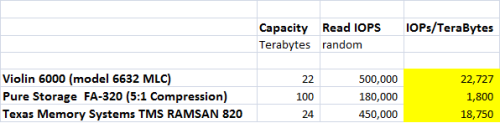

Compare these numbers with another SSD appliance metric:

The guys from the Lone Star State make a great product with a proud history and happy users. It’s built like the proverbial brick outhouse and their hardware specs are top-notch.

They were #2 in IOPS/TB and #1 in cross-section bandwidth in this comparison.

The Violin specs make it a tie: #1 in IOPS/TB and #2 in cross-section bandwidth. It’s got plenty of network connectivity (8x 8G Fibre Channel) but the MLC array is specified at a lower 2 GB/sec. It’s a well-balanced design and boasts some very nitty-gritty details built from the ground up with loving care.

The rearguard of these three is Pure Storage, and the numbers look alarmingly low at first, until you look at the foundation technology of this particular vendor. The somewhat low numbers are a direct artifact of using de-dupe/compression to meet your capacity goals.

Coming soon – Server vs. Array Flash – a Suitability Analysis…
